Evaluation Model for MariaDB Xpand

This page is part of MariaDB's Documentation.

Overview

This section describes how a query is evaluated in the database. In Xpand, data is sliced across nodes and the query is sent to the data. This is one of the fundamental principles of the database, and it allows Xpand to scale almost linearly as more nodes are added.

For concepts about how the data is distributed, please refer to "Data Distribution with MariaDB Xpand" as this page will assume an understanding of those concepts. The primary concepts to remember are that tables and indices are split across nodes, and that each of them has its own distribution that allows us to know, given the lead column(s), precisely where the data lives.
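As a rough illustration of how a lead-column value can determine where a row lives, the sketch below hashes a key into a slice number and looks up the node that holds that slice. The hash function, the slice count, and the slice-to-node map are all assumptions made for this example; they are not Xpand's actual distribution algorithm.

```python
import hashlib

NUM_SLICES = 6  # hypothetical number of slices for one index

# Hypothetical placement of slices on a three-node cluster.
SLICE_TO_NODE = {
    0: "node1", 1: "node2", 2: "node3",
    3: "node1", 4: "node2", 5: "node3",
}


def slice_for(lead_columns):
    """Map the lead column value(s) of a row to a slice number."""
    key = "|".join(str(value) for value in lead_columns).encode()
    digest = hashlib.sha256(key).digest()
    # Use the first 8 bytes of the digest as an integer hash.
    return int.from_bytes(digest[:8], "big") % NUM_SLICES


def node_for(lead_columns):
    """Return the node that holds the slice for these lead column values."""
    return SLICE_TO_NODE[slice_for(lead_columns)]


if __name__ == "__main__":
    for user_id in (1, 2, 42):
        print(user_id, slice_for((user_id,)), node_for((user_id,)))
```

Because the mapping is deterministic, any node can compute where a given key lives without asking the other nodes, which is what makes it possible to send the query to the data.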

Parallel Query Evaluation Examples

Discussion

Let's go over the concepts involved in distributed evaluation and parallel processing of queries.

Query Lifetime

User queries are distributed across nodes in Xpand. Here is how a query is processed:

The query is associated with a session on the node where it arrives, and that node orchestrates the concurrency control for it.

After parsing, the query goes through the query optimizer and planner where an optimal plan is chosen for it based on the statistics.

The plan is then compiled by the compiler, which breaks it into smaller query fragments and runs code optimizations.

The compiled query fragments are then run in a distributed way across nodes, and the results are returned to the node where the query started and back to the user.

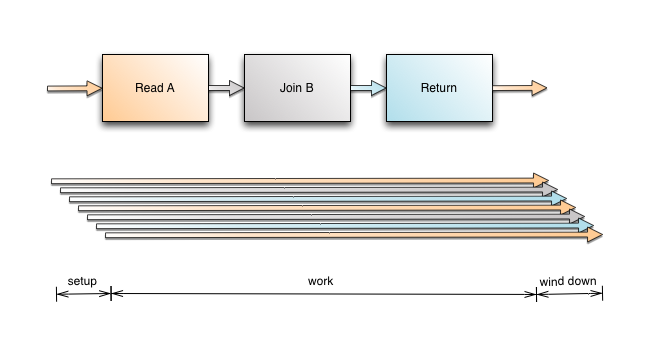

Using Pipeline and Concurrent Parallelism

Xpand uses both concurrent parallelism and pipeline parallelism for massively parallel computation of your queries. Simpler queries, like point selects and inserts, usually use only a single core on a node for their evaluation. As more nodes are added, more cores and memory become available, allowing Xpand to handle more simple queries concurrently.

More complex analytic queries, such as those involving joins and aggregates, take advantage of both pipeline and concurrent parallelism, using cores from multiple nodes and multiple cores on a single node to evaluate queries quickly. The parallel query evaluation examples show how such queries scale.

Concurrent parallelism occurs between slices, each of which may be on one or more nodes.
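A minimal sketch of concurrent parallelism across slices, assuming an aggregate that can be computed per slice and then combined. The slice contents and function names are hypothetical, and Python threads only stand in for the cores and nodes that would do this work in a real cluster.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical slices of one table, each holding some values of a column.
SLICES = [
    [3, 5, 8],
    [1, 9],
    [4, 4, 7, 2],
]


def partial_sum_count(rows):
    """Compute a partial aggregate over a single slice."""
    return sum(rows), len(rows)


def parallel_average(slices):
    """Aggregate every slice concurrently, then combine the partial results."""
    with ThreadPoolExecutor(max_workers=len(slices)) as pool:
        partials = list(pool.map(partial_sum_count, slices))
    total = sum(s for s, _ in partials)
    count = sum(c for _, c in partials)
    return total / count


if __name__ == "__main__":
    print(parallel_average(SLICES))
```

Each slice is processed independently, so adding slices (and the cores to serve them) adds workers rather than lengthening any single worker's task.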

Pipeline parallelism occurs among rows in a single query. Each row in an analytic query might involve three hops for a two-way join. The latency added to one row is the latency of three hops, so set-up time is negligible. The other possible limit is bandwidth. As previously mentioned, Xpand has independent distribution for each table and index, so we only forward rows to the correct nodes.
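Pipeline parallelism can be pictured as rows streaming through a chain of stages, with each row moving on to the next stage as soon as it is ready instead of waiting for the whole previous stage to finish. The sketch below models this with Python generators for a two-way join. The tables, the three stage names, and the data are hypothetical, and generators only model the streaming behavior; they do not run the stages on separate nodes.

```python
# Hypothetical rows of an "orders" table: (order_id, customer_id).
ORDERS = [(1, 10), (2, 20), (3, 10)]

# Hypothetical "customers" lookup keyed by customer_id.
CUSTOMERS = {10: "Ada", 20: "Grace"}


def scan_orders():
    """Stage 1: emit order rows one at a time."""
    for row in ORDERS:
        yield row


def join_customers(rows):
    """Stage 2: for each incoming row, look up the matching customer."""
    for order_id, customer_id in rows:
        name = CUSTOMERS.get(customer_id)
        if name is not None:
            yield order_id, name


def return_to_origin(rows):
    """Stage 3: forward each joined row to where the query started."""
    for row in rows:
        yield row


def run_pipeline():
    """Chain the stages; each row flows through all three before the next."""
    return list(return_to_origin(join_customers(scan_orders())))


if __name__ == "__main__":
    print(run_pipeline())
```

Since no stage buffers the full input, the extra delay seen by any one row is only the cost of passing through the stages, which matches the point above that per-row latency is bounded by the number of hops.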

How Do You Scale Evaluation in a Distributed System?

This table summarizes the different elements of a scalable distributed database as well as what it takes to build one:

| | Throughput | Speedup | Concurrent Writes |
|---|---|---|---|
| Logical Requirement | Work increases linearly with | Analytic query speed increases linearly with | Concurrency Overhead does not increase with |
| System Characteristic | For evaluation of one query | For evaluation of one query | For evaluation of one query |
| Why Systems Fail | Many databases use sharding-like approach to slice their data | Many databases do not use Massively Parallel Processing | Many databases used Shared Data architecture where |
| Examples of Limited Design | Co-location of indexes leading to broadcasts | Single Node Query Processing leading to limited query speedup | Shared data leading to ping-pong effect on hot data<br>Coarse-grained locking leading to limited write concurrency |
| Why Xpand Shines | Xpand has | Xpand has | Xpand has |

Conclusion

For both simple and complex queries, Xpand is able to scale almost linearly due to its query evaluation approach. We have also seen how most of our competitors hit various bottlenecks because of the design choices they have made.