How We Achieved 5 Million QPS at MariaDB OpenWorks 2019

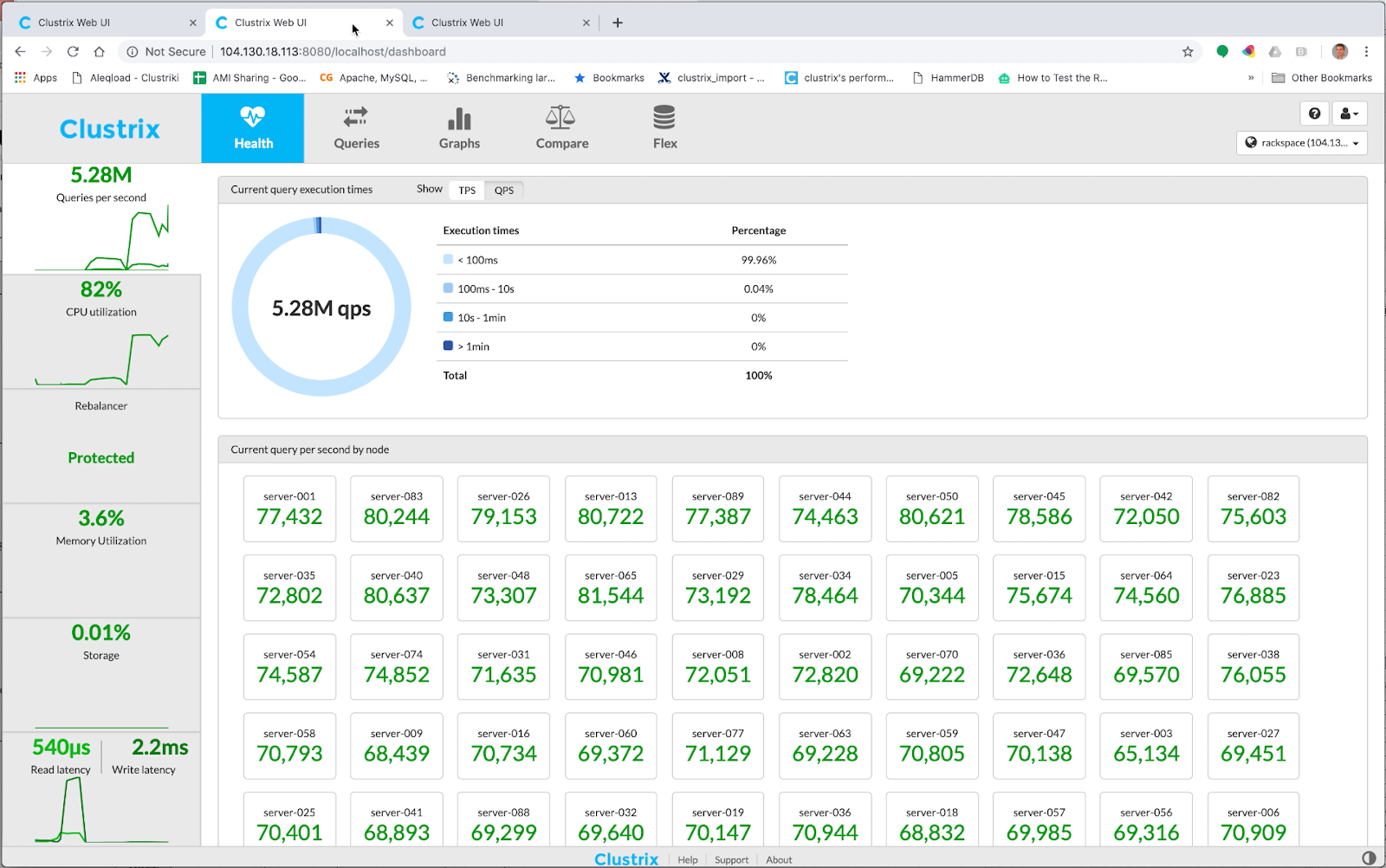

Last September, MariaDB acquired Clustrix for its distributed database technology. With ClustrixDB, if your application needs more capacity from the database, scaling is as simple as adding more nodes. At the MariaDB OpenWorks 2019 Conference in New York, we put that claim to the test. With the generosity and support of Rackspace, we demonstrated a live cluster sustaining over 5.2 million queries per second (QPS) on a 90:10 read/write transactional workload.

This cluster marks new milestones for ClustrixDB: it is the largest ClustrixDB cluster ever configured, using 75 independent bare-metal servers with a total of 3,000 hyperthreaded cores, and it delivered the highest sustained throughput ClustrixDB has ever recorded. Prior to this exercise, the largest cluster ClustrixDB had run on was 64 nodes in a virtual cloud environment, utilizing only 8 virtual cores per node, or 1,024 total cores. The largest bare-metal cluster prior to this was 32 nodes with 40 cores each, or 1,280 total cores.
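To put the bare-metal numbers side by side, the short Python sketch below multiplies out the core counts and derives back-of-envelope averages. It uses 5.2 million as a lower bound for "over 5.2 million" QPS and assumes the load is spread evenly across nodes and cores, which the post does not state; the constant names are illustrative.

```python
# Figures stated in the post; 5.2 million is the lower bound of "over 5.2 million".
SUSTAINED_QPS = 5_200_000
NODES, CORES_PER_NODE = 75, 40            # bare-metal nodes x hyperthreaded cores
PREV_NODES, PREV_CORES_PER_NODE = 32, 40  # previous largest bare-metal cluster

total_cores = NODES * CORES_PER_NODE                  # 3,000
prev_total_cores = PREV_NODES * PREV_CORES_PER_NODE   # 1,280
core_growth = total_cores / prev_total_cores          # ~2.34x

# Back-of-envelope averages, assuming an even spread of load (an assumption).
qps_per_node = SUSTAINED_QPS / NODES                  # ~69,333
qps_per_core = SUSTAINED_QPS / total_cores            # ~1,733

print(f"{total_cores:,} cores vs {prev_total_cores:,} ({core_growth:.2f}x)")
print(f"~{qps_per_node:,.0f} QPS per node, ~{qps_per_core:,.0f} QPS per core")
```

In other words, the new cluster had roughly 2.3 times the cores of the previous bare-metal record, and each node averaged on the order of 70,000 QPS.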

Making all this happen was no easy task. We were pushing not only ClustrixDB into new territory but also the hardware infrastructure underneath it. To reach the performance we were aiming for, we knew we needed a lot of processing power; the trick was finding a provider with that much available capacity. We tried working with several cloud providers, but only Rackspace was able and willing to deliver. The test ran in Rackspace’s Virginia region on 105 servers in total. When we first started testing, that was more server capacity than Rackspace had available in the region, so Rackspace’s engineering team worked into the weekend installing new servers to get us the capacity we needed. A big thank-you goes to Rackspace for making it happen.

As this testing shows, ClustrixDB, a fully distributed, transactional RDBMS, is built to handle the scaling of a large OLTP system. For more information, you can watch the demo exactly as it happened live, in the keynote address at MariaDB OpenWorks 2019. A separate breakout session at the conference also explained ClustrixDB’s architecture, and how it achieves scale-out performance, in greater detail. Watch the ClustrixDB session recording from MariaDB OpenWorks.

“Just the Facts”

The Hardware

The cluster was composed of 75 Rackspace OnMetal™ I/O v1 Servers.

CPU: Dual 2.8 GHz, 10-core Intel Xeon E5-2680 v2 (40 hyperthreaded cores per server)

RAM: 128 GB

System Disk: 32 GB

Data Disk: Dual 1.6 TB PCIe flash cards

Network: Redundant 10 Gb/s connections in a high-availability bond
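Multiplying out the per-server specs gives the database tier's aggregate resources. This minimal Python sketch uses only the numbers listed above; "raw flash" simply sums device capacity and says nothing about usable space after filesystem or replication overhead.

```python
# Per-server specs of the 75 OnMetal I/O v1 database servers, as listed above.
DB_NODES = 75
SOCKETS, CORES_PER_SOCKET, THREADS_PER_CORE = 2, 10, 2   # dual 10-core, hyperthreaded
RAM_GB = 128
FLASH_CARDS, FLASH_TB_PER_CARD = 2, 1.6

threads_per_node = SOCKETS * CORES_PER_SOCKET * THREADS_PER_CORE  # 40
total_threads = DB_NODES * threads_per_node                       # 3,000
total_ram_gb = DB_NODES * RAM_GB                                  # 9,600 GB
total_flash_tb = DB_NODES * FLASH_CARDS * FLASH_TB_PER_CARD       # ~240 TB raw

print(f"{threads_per_node} threads/node, {total_threads:,} threads total")
print(f"{total_ram_gb:,} GB RAM, {total_flash_tb:.0f} TB raw flash")
```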

Driving the workload were 30 Rackspace OnMetal™ General Purpose Medium v2 Servers running CentOS 7.

CPU: Dual 2.8 GHz, 6-core Intel Xeon E5-2620 v3

RAM: 64 GB

System Disk: 800 GB

Network: Redundant 10 Gb/s connections in a high-availability bond
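As a cross-check on the 105-server figure mentioned earlier, the database and driver tiers add up as follows. This Python sketch counts only physical cores for the drivers, since the post does not say whether Hyper-Threading was enabled on them.

```python
# Driver tier: 30 OnMetal General Purpose Medium v2 servers, as listed above.
DRIVERS = 30
DRIVER_PHYSICAL_CORES = 2 * 6     # dual 6-core Xeon E5-2620 v3
DB_NODES = 75

total_servers = DB_NODES + DRIVERS                   # 105 servers in the test
driver_physical_cores = DRIVERS * DRIVER_PHYSICAL_CORES  # 360

print(total_servers, driver_physical_cores)
```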

The Software

Operating System: CentOS Linux release 7.6.1810

Database Software: ClustrixDB v9.2

The Workload

The demonstration used a variation of the sysbench test harness, configured with a 90:10 read/write transactional mix and 4,650 concurrent threads spread across 30 separate driver systems.

Test Software: sysbench 1.0.16 (using bundled LuaJIT 2.1.0-beta2)

Test Parameters: `sysbench oltp_read_write --point-selects=9 --range-selects=false --index-updates=0 --non-index-updates=1 --delete-inserts=0 --table-size=10000000 --time=99999999 --rand-type=uniform`
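These parameters are what produce the 90:10 mix: with range selects, index updates, and delete-inserts switched off, each transaction issues nine point selects and one non-indexed update. The Python sketch below rebuilds the command line with standard `--` option prefixes and tallies statements per transaction. The counting rules reflect our reading of the stock sysbench 1.0 OLTP scripts (the demo used a variation of the harness), BEGIN/COMMIT are not counted, and the even split of threads across drivers is an assumption.

```python
# Test parameters from the post, written with ASCII "--" option prefixes.
params = {
    "point-selects": 9,
    "range-selects": "false",
    "index-updates": 0,
    "non-index-updates": 1,
    "delete-inserts": 0,
    "table-size": 10_000_000,
    "time": 99_999_999,
    "rand-type": "uniform",
}
argv = ["sysbench", "oltp_read_write"] + [f"--{k}={v}" for k, v in params.items()]

# Statements per transaction (BEGIN/COMMIT not counted). Range selects are
# disabled, so reads are point selects only; each delete-insert would add
# one DELETE and one INSERT.
reads = params["point-selects"]
writes = (params["index-updates"] + params["non-index-updates"]
          + 2 * params["delete-inserts"])
read_share = reads / (reads + writes)             # 0.9

# Assumes the stated 4,650 threads were split evenly over the 30 drivers.
THREADS, DRIVERS = 4_650, 30
threads_per_driver = THREADS // DRIVERS           # 155

print(" ".join(argv))
print(f"read:write = {reads}:{writes} ({read_share:.0%} reads), "
      f"{threads_per_driver} threads per driver")
```

Actually executing `argv` would also require connection options such as `--mysql-host`, which the post does not list.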